Predicting the Future of Text Technologies

How can we analyze five millennia of text technologies from pre-tablet technologies to digital devices? In our project we decided to tackle these huge questions with big data and ventured to gather data on the whole history of human text-based communication. Using Text Technological techniques and theories, we assembled a dataset that measures key criteria common to all communication technologies. Combining domain knowledge with methods expertise, we have assembled a multidisciplinary team to tackle the opportunities created by our datasets. One of the first things we noticed is that no comprehensive or standardized metadata system exists to describe text technologies comparatively, so we designed and created one. In our schema we have included not only basic features like dimensions, date of creation, and size, but we have also calculated capacity, portability, content permanence and geographical spread to provide access to one of the best curated datasets in scholarship to date. We have analyzed, measured and catalogued textual objects using these criteria and are now moving to the validation phase with large open access datasets from heritage repositories around the world.

The CoDEX

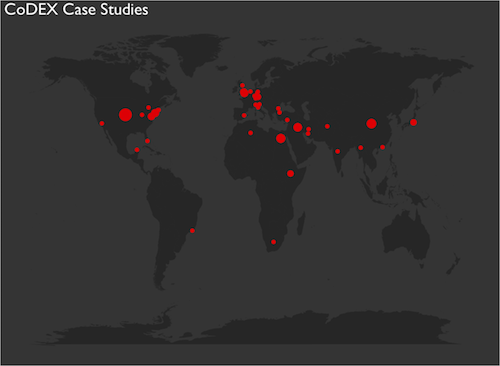

The traditional narrative of book history paid little attention to non-European text technologies. We have taken a different approach. We have constructed our database to include case studies from all over the world, achieving a truly global perspective. This is how CoDEX (the Comparative Data Experiment) was born: our curated database of case studies encompassing the whole of text technologies’ history and reach. Stretching from 3200 BC to 2017 AD, the database includes examples of all major types of text technologies, categorized using our metadata schema, all machine-readable but controlled by humans. To avoid unconscious biases we compiled an initial dataset supervised by experts in the field as the perfect starting point.

The patterns

The data gathered in our database allowed us to isolate basic patterns governing the evolution of text technologies throughout their life cycles. We have observed that text-based forms of communication often follow a linear pattern of evolution, from tokens through tablets and scrolls to codices.

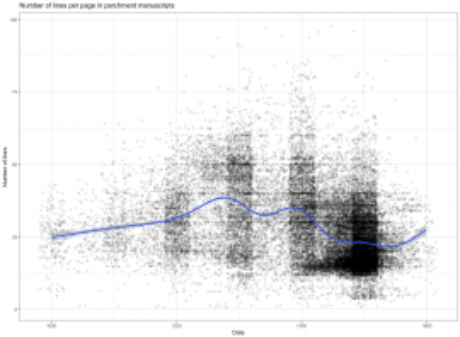

Those cycles are not uniform and tend to operate on different timescales and often repeat themselves inside different text technology types. Particular features of different technologies limit and influence each other. We have recognized patterns governing the relationships between such categories, such as interactivity and content permanence, or the total capacity and position in the life cycle. Having documented those patterns we have now moved from a curated dataset to big data applications.

The future

Now we are in a position to apply our findings to present-day and future text technologies, predicting their evolution, recognizing their weak and strong facets, and helping us all to better understand how we communicate. The second, current phase of CyberText is focused on applying the patterns we have learned from the analysis of CoDEX to bigger datasets of past text technologies. We have been working with the open catalogues of Schoenberg manuscripts database, British Library research datasets, and British Museum data, applying our metadata schema automatically to tens of thousands of objects. We are already seeing that the cycles we have recognized while working on CoDEX are to be found also in those datasets. It is an exciting moment to have obtained proof of concept. We can now begin to stipulate what makes a text technology most likely to succeed; what transient characteristics are most likely to emerge; and to predict the order of various features that are essential in making a successful text technology.